ST. LOUIS—An apparent robocall that used artificial intelligence to mimic President Joe Biden’s voice and discourage New Hampshire voters from coming to the polls during last month’s primary election has not moved state and federal lawmakers to act in nearly the same way they have since singer Taylor Swift was victimized in recent days by pornographic and violent deepfake images.

With the 2024 political cycle well underway, it’s unclear if new state or federal efforts to address the use of artificial intelligence in campaigns will be in place in time to make a difference, or if they would effectively address bad actors based overseas.

The message a trio of Missouri organizations shared late last month with their members: Candidates and their campaigns need to be constantly monitoring social media to protect themselves, and voters need to be ready to do their own research and resist temptation.

“Whether or not it’s generated by AI, we all ought to be tethered to the truth instead of trying to be influenced by stuff that isn’t accurate or isn’t factual. We’re concerned by those tools that are out there that could impact a community that’s unaware that AI’s taken this to a more realistic level than what we’ve seen in the past,” said Steve Hobbs, executive director of the Missouri Association of Counties, in a webinar that also included the Missouri School Boards Association and the Missouri Municipal League.

“I know sometimes it's very easy to see and just hit share, and instead if you see something that doesn’t seem right, and with artificial intelligence sometimes it's very difficult to know, but verify the facts, before you repost,” Hobbs said. That means contacting local election authorities, and asking candidates, particularly those who are local and more accessible. “Talk to the candidate. Candidates also need to be very vigilant about monitoring what is being said during their race and what is not being said,” Hobbs added.

What's at stake? Potentially, an election result that won't be accepted and that breeds disputes, said Richard Sheets, executive director of the Missouri Municipal League.

Facebook and Instagram will require political ads running on their platforms to disclose if they were created using artificial intelligence, their parent company announced in November.

Under the new policy applied by Meta worldwide, labels acknowledging the use of AI will appear on users’ screens when they click on ads.

Meta’s new policy covers any advertisement for a social issue, election or political candidate that includes a realistic image of a person or event that has been altered using AI. More modest use of the technology, such as resizing or sharpening an image, is allowed without a disclosure.

Google unveiled a similar AI labeling policy for political ads in September. Under that rule, political ads that play on YouTube or other Google platforms will have to disclose the use of AI-altered voices or imagery.

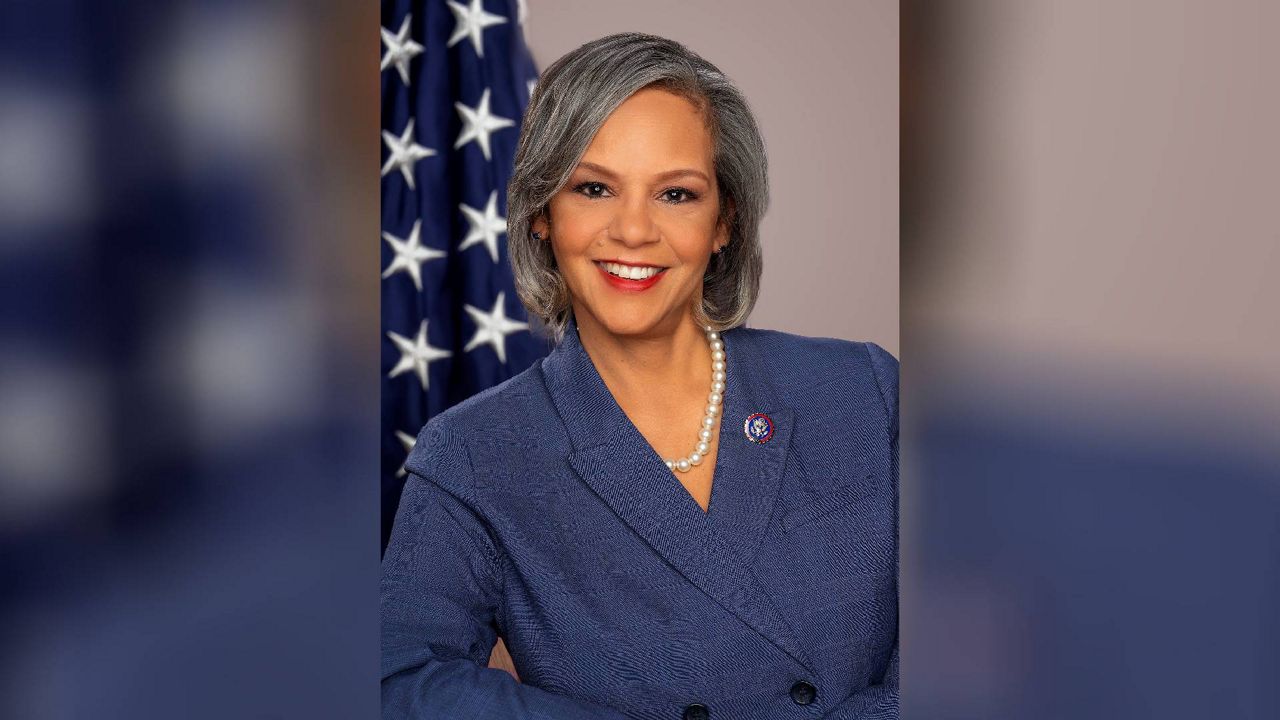

Legislation that would require candidates to label campaign advertisements created with AI has been introduced in the House by Rep. Yvette Clarke, D-N.Y., who has also sponsored legislation that would require anyone creating synthetic images to add a watermark indicating the fact.

Clarke said her greatest fear is that generative AI could be used before the 2024 election to create a video or audio that incites violence and turns Americans against each other.

“It’s important that we keep up with the technology,” Clarke told The Associated Press last May. “We’ve got to set up some guardrails. People can be deceived, and it only takes a split second. People are busy with their lives and they don’t have the time to check every piece of information. AI being weaponized, in a political season, it could be extremely disruptive.”

The Federal Election Commission is reviewing public comments submitted on a proposed rule to govern ads involving the use of artificial intelligence, an agency spokesperson confirmed to Spectrum News. The rule-making process could be resolved by early summer, FEC Chairman Sean Cooksey said in a statement to the Washington Post last month that was also provided to Spectrum News.

“Any suggestion that the FEC is not working on the pending AI rulemaking petition is false. The Commission and its staff are diligently reviewing the thousands of public comments submitted. When that process is complete, I expect the Commission will resolve the AI rulemaking by early summer.”

On Jan. 30, state Rep. Adam Schwadron, R-St. Charles, announced he would file HB 2573, “The Taylor Swift Act,” which would let victims of deepfake images bring civil action in court.

Schwadron, also a GOP candidate for secretary of state, said he’s been approached about amending his bill to also address political advertising, but said it’s “too self-serving to me to tackle that before tackling the issue in my bill.”