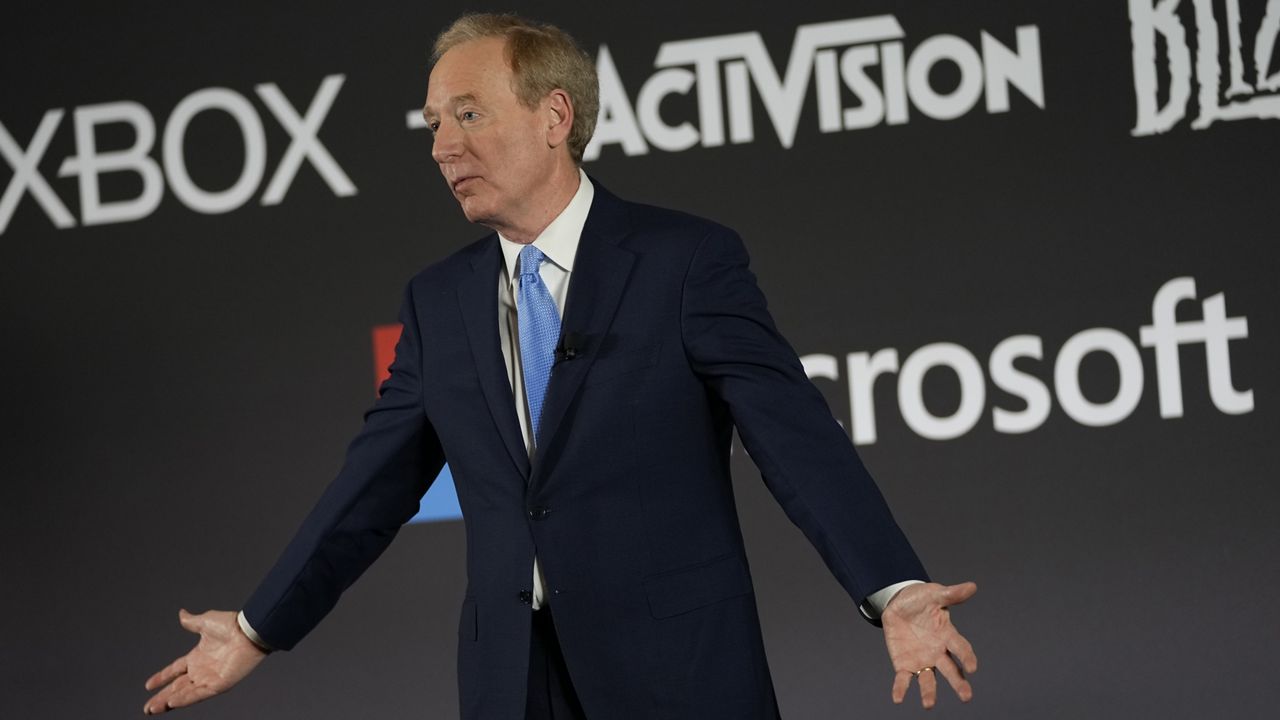

In the latest in a string of warnings from tech executives about artificial intelligence, Microsoft President Brad Smith on Thursday said “deepfakes” are his biggest concern about AI.

Deepfakes are AI-generated videos, photos or audio that can, often convincingly, make it seem like someone said or did something they didn’t.

Speaking to a group of government officials, members of Congress and policy experts Thursday in Washington, Smith said he fears that deepfakes will help create more disinformation.

“We're going [to] have to address the issues around deepfakes,” Smith said, according to Reuters. “We're going to have to address in particular what we worry about most, foreign cyber influence operations, the kinds of activities that are already taking place by the Russian government, the Chinese, the Iranians.

“We need to take steps to protect against the alteration of legitimate content with an intent to deceive or defraud people through the use of AI.”

During Thursday’s speech, Smith presented Microsoft’s five-point blueprint for the public governance of AI.

Among his proposals are labeling AI-generated content so the public knows where it came from and enacting new laws to hold its creators accountable.

Smith’s plan also calls for safety brakes for AI systems that control critical infrastructure, including power grids, water systems and city traffic control systems. It further proposes a new government regulatory agency, along with public-private partnerships to conduct the “important work” needed “to use AI to protect democracy and fundamental rights, provide broad access to the AI skills that will promote inclusive growth, and use the power of AI to advance the planet’s sustainability needs,” Smith wrote in a paper published on Microsoft’s blog.

“As technological change accelerates, the work to govern AI responsibly must keep pace with it,” Smith wrote.

Smith is far from alone in warning about the risks of AI, which tech executives also argue has immense potential to improve the world, including by making scientific breakthroughs and creating tools that make people more efficient at their jobs.

But critics argue it could also steal people’s jobs, be used to create disinformation or spiral out of human control if systems are allowed to write and execute their own code.

Earlier this month, Geoffrey Hinton, the computer scientist known as “the godfather of AI,” resigned from Google so he could speak out about the dangers of the technology he helped pioneer. Among Hinton’s concerns is that the internet could become flooded with fake images and text and the average person will “not be able to know what is true anymore,” he told The New York Times.

Last month, Google CEO Sundar Pichai conceded in an interview with CBS News’ “60 Minutes” that AI will make it easier to create fake news and fake images, including videos.

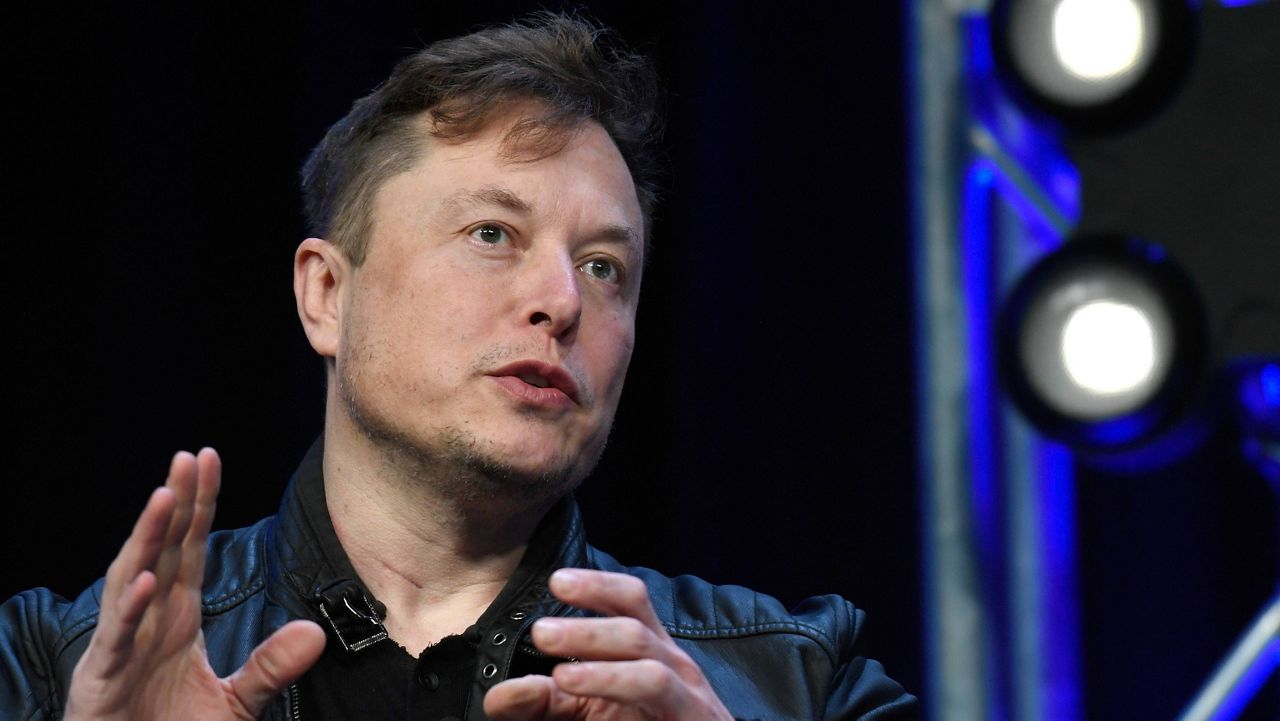

In March, more than 1,000 technology leaders and researchers, including SpaceX, Tesla and Twitter CEO Elon Musk and Apple co-founder Steve Wozniak, wrote an open letter calling on companies to pause, for six months, the development of AI systems more powerful than GPT-4, OpenAI’s latest release.

“Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth?” the letter said.

Last week, executives with OpenAI and IBM told a Senate subcommittee they support government regulation of AI.

OpenAI CEO Sam Altman said he believes the benefits of the tools developed and deployed by his company “vastly outweigh the risks, but ensuring their safety is vital to our work.”

“If this technology goes wrong, it can go quite wrong,” he said.

On Tuesday, the Biden administration issued a request for public input as it works to develop a national strategy governing artificial intelligence.

Ryan Chatelain - Digital Media Producer

Ryan Chatelain is a national news digital content producer for Spectrum News and is based in New York City. He has previously covered both news and sports for WFAN Sports Radio, CBS New York, Newsday, amNewYork and The Courier in his home state of Louisiana.