Imagine a therapist that’s always there, always alert, and that responds within a matter of seconds.

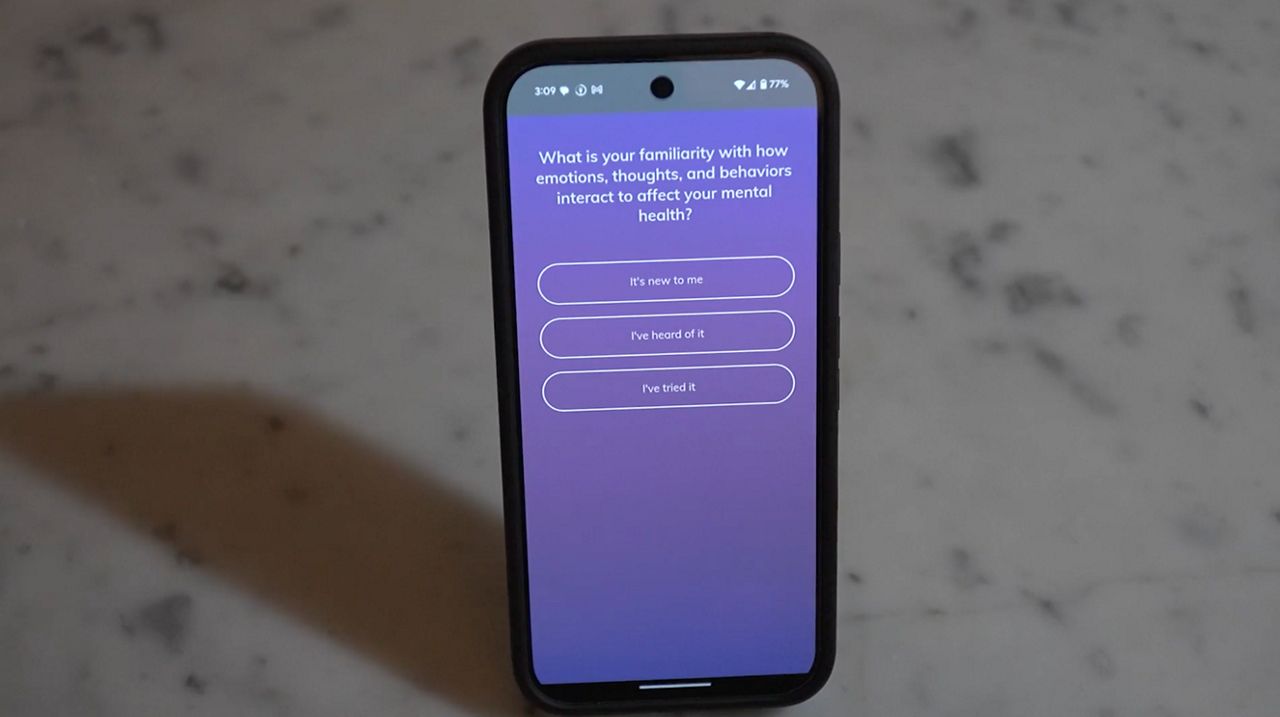

But it’s not a real therapist — it’s a chatbot. In the past several years, a number of apps have emerged that focus on using artificial intelligence to improve users’ mental health. The one we tried out is called Youper.

Youper was founded in 2016 by psychiatrist Dr. Jose Hamilton.

“One of the recurring themes in our feedback was, ‘It’s extremely hard to find time for myself, but with Youper, I can just sneak into my bathroom on my own and have a five-minute conversation that will unload a lot of my negative feelings [and] help me process my emotions,’” said Hamilton.

Hamilton wanted to make mental health care available to more people.

“We see Youper as a first step when you’re struggling with an emotional health issue,” Hamilton said. “[It’s] a tool that removes the stigma, removes barriers like access, cost, [and] whether you are covered by insurance or not. Youper is extremely affordable.”

Mental health apps can also help fill gaps in care: as of December 2023, more than half of Americans lived in an area with a shortage of mental health professionals.

Dr. Russell Fulmer, a professor and director of Graduate Counseling Programs at Husson University, researches AI in the behavioral sciences.

“When faced with the reality of receiving no support or some, however imperfect, AI could be a piece of that puzzle,” Fulmer said.

AI's ability to provide mental health support has shown promise.

In a recent study, ChatGPT and trained therapists were each asked to respond to the same prompts, and participants could barely tell the difference. Respondents correctly identified a human-written response only 5% more often than they correctly identified a ChatGPT-written one. ChatGPT's responses were also rated as more empathic, culturally competent, and connecting.

“ChatGPT is, I’m going to anthropomorphize here, but it’s very smart and capable and smarter than us in so many ways. And it can work in two seconds,” Fulmer said.

Even so, Fulmer isn't worried that therapists will be out of a job anytime soon.

“I think some people will always want that: ‘I know I’m talking to a human being, a flesh-and-blood human being,’” Fulmer said.

Hamilton agreed, saying, “When we are having a conversation and engaging with a patient, with a client, with a friend, with a loved one, we bring with us all this history of what we are. And I think this is the main difference between talking to a human and talking to an AI.”

In fact, Hamilton said Youper works particularly well when used alongside therapy; many users actually come to the app at the recommendation of their therapists.

“Sometimes you see a therapist one week, and then you need to wait another week to talk to your therapist again. Youper can be used in conjunction; it’s something that you can use in the middle,” Hamilton said.

But AI chatbots are not without their risks. Data security is a concern, since the apps hold large amounts of personal information.

“If there was a data breach and that information got out, especially on a large scale, that would be, I think, big enough to serve as an impetus, maybe, for some laws or more regulation.”

There is also concern about people becoming too attached to AI chatbots. Tech company Character.AI has been sued by parents who allege the app harmed their children; in one case, a teen died by suicide after developing a close relationship with the bot. Character.AI has since said it’s taking steps to prioritize safety.

Notably, Character.AI is not a therapeutic chatbot. Hamilton said Youper is careful to put guardrails in place for its users.

“Youper was programmed and designed to be safe,” he said. “Youper will never, for example, provide a diagnosis, offer treatment or treatment advice, or try to create or engage in a relationship.”

Fulmer and Hamilton both stress that as AI gains more capabilities, it's important for companies to minimize harm and harness the technology for good.

“Where there's the potential for great promise, there's also the potential for great peril. The promise of AI depends on proper ethics implementation,” said Fulmer.

“This will be everywhere,” Hamilton said. “And it’s up to us, especially mental health professionals in tech [and] tech companies in this field that are creating this technology, to make sure that we are creating something that’s safe and that will be beneficial.”

Safe, beneficial, and always close at hand — that's quite a prescription for therapeutic chatbots.